|

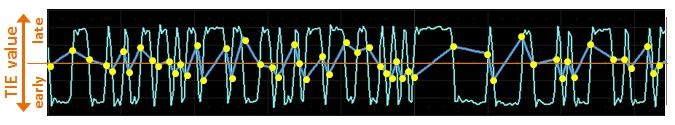

| Figure 1: An example of a time-interval error track |

As it happens, the landscape for jitter analysis was undergoing seismic change in that time frame. For one thing, data rates were spiraling upward, bringing signal-integrity concerns, and with them jitter analysis, to the forefront. There was also increasing use of data-only signaling standards such as USB, which carry no separate clock and so created new problems for test methodologies to solve.

Perhaps even more importantly, though, real-time oscilloscopes were coming into their own. In the early 1990s, a real-time oscilloscope might have had a memory depth of a few tens of thousands of data points. By the early 2000s, memory depth was extending into the megapoints. Concurrently, processing power in these instruments was on the rise. On the whole, that generation of real-time oscilloscopes began to point the way toward how to deal with jitter analysis going forward.

The difficulty in tail fitting arises from the need to fit two parameters at once: random jitter (Rj(δδ)) and deterministic jitter (Dj(δδ)). However, if we already know Rj(δδ), then we need only find Dj(δδ). The problem, then, becomes how to separate the Rj(δδ) tail from the Dj(δδ) tail. This is where the growing capabilities of real-time oscilloscopes became a solution to the jitter-analysis problem.
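To see why knowing Rj(δδ) simplifies things, recall the relation usually quoted for the Dual-Dirac model: total jitter at a given bit-error ratio is Dj(δδ) plus 2·Q·Rj(δδ), where Q is the Gaussian quantile for that BER (about 7.03 at 1e-12). The Python sketch below is only an illustration under that assumption. The function names and the example numbers are hypothetical, and it ignores refinements such as transition-density corrections.

```python
from statistics import NormalDist


def q_for_ber(ber: float) -> float:
    """Gaussian quantile Q whose one-sided tail probability equals ber."""
    return -NormalDist().inv_cdf(ber)


def dj_from_known_rj(tj: float, rj: float, ber: float = 1e-12) -> float:
    """Solve TJ(BER) = Dj(dd) + 2*Q(BER)*Rj(dd) for Dj(dd).

    Assumes the commonly quoted Dual-Dirac relation; tj and rj must
    be expressed in the same unit (for example, picoseconds).
    """
    return tj - 2.0 * q_for_ber(ber) * rj


# Hypothetical numbers, in picoseconds: TJ = 30 ps at BER 1e-12, Rj = 1 ps rms.
dj = dj_from_known_rj(tj=30.0, rj=1.0)
print(f"Q = {q_for_ber(1e-12):.3f}, Dj(dd) = {dj:.2f} ps")
```

With Rj(δδ) pinned down independently, the two-parameter fit collapses to a single subtraction, which is the appeal of measuring it separately.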

By the early 2000s, real-time oscilloscopes were being used to analyze and isolate difficult circuit problems. In embedded systems, they were key to debugging thanks to their ability to trigger on rare events or transients, and to decode serial data. Users were able to acquire very long records with which to determine when edges actually arrive and characterize jitter. This led directly to the appearance of dedicated serial-data analyzers.

During the 2000s, there were many advances in using real-time oscilloscopes to determine the arrival times of edges. There was an evolution of algorithms aimed at separating out various components of jitter. Data analysis tricks were being employed to strip out data-dependent jitter. There were attempts to insulate against the encroachment of bounded uncorrelated jitter, as well as to determine the periodic jitter components.

Behind most, if not all, of these efforts to better analyze and quantify jitter is the Dual-Dirac model covered in the previous installment of this blog series. But during the 1990s, another approach to jitter measurement began taking root: time interval error (TIE).
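For readers who want something concrete: TIE is commonly defined as the difference between when each edge actually arrives and when an ideal reference clock says it should have arrived. Here is a minimal Python sketch, assuming the reference is a best-fit constant-rate clock and that edge times have already been extracted from the waveform. The function name and the sample data are hypothetical, not taken from any instrument's software.

```python
def time_interval_error(edge_times, nominal_ui):
    """Return the TIE of each edge against a best-fit constant-rate clock.

    edge_times: measured edge arrival times, in ascending order.
    nominal_ui: nominal unit interval (bit period), same unit as edge_times.
    Data signals do not toggle every bit, so each edge is first assigned
    to the nearest unit-interval slot. Needs edges in at least two slots.
    """
    t0 = edge_times[0]
    slots = [round((t - t0) / nominal_ui) for t in edge_times]

    # Least-squares line t = intercept + slope * slot (the "ideal" clock).
    n = len(edge_times)
    mean_k = sum(slots) / n
    mean_t = sum(edge_times) / n
    var_k = sum((k - mean_k) ** 2 for k in slots)
    cov_kt = sum((k - mean_k) * (t - mean_t)
                 for k, t in zip(slots, edge_times))
    slope = cov_kt / var_k
    intercept = mean_t - slope * mean_k

    # TIE is the residual of each measured edge from the fitted clock.
    return [t - (intercept + slope * k) for k, t in zip(slots, edge_times)]


# Hypothetical edge times in nanoseconds for a nominal 1 ns unit interval.
edges_ns = [0.00, 1.02, 2.98, 4.00, 7.01, 8.00]
tie_track = time_interval_error(edges_ns, nominal_ui=1.0)
print([f"{x:+.3f}" for x in tie_track])
```

Plotting these values against time gives a TIE track like the ones shown in the figures.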

Figure 2: The TIE track (in yellow at top) lends itself to further analysis of slowly-varying jitter elements

From this data, other forms of analysis become available, and these were facilitated by the application, in the 1990s, of the phase-locked loop (PLL) to clock-data recovery. With a USB signal, you have data only, with no accompanying clock, so you must determine the underlying bit rate from the data itself. USB is a good example of how the PLL was brought to bear (in concert with some applied math). Chips began to be built into oscilloscopes that combined that math and engineering to pull out the wandering, low-frequency jitter relative to the bit rate. It's a little like listening to music: We tap our feet to the beat, and whether it speeds up or slows down, we track it. That's what the PLL does in clock recovery for a clockless signal.
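The foot-tapping analogy can be sketched in a few lines of Python. What follows is a deliberately simplified first-order software loop, not a model of any particular oscilloscope's clock-recovery hardware. Practical clock recovery often uses second-order loops with bandwidths set by the relevant standard, and the function name, gain value, and test signal here are all assumptions made for illustration.

```python
import math


def pll_residual_tie(edge_times, nominal_ui, gain=0.1):
    """Track edges with a first-order software PLL and return the residuals.

    Each residual is the edge time minus the recovered-clock edge nearest
    to it. Slow wander is followed by the loop and mostly removed from the
    residuals; fast jitter is not followed and remains in them.
    """
    clock_edge = edge_times[0]  # current recovered-clock edge estimate
    residuals = []
    for t in edge_times:
        # Advance the recovered clock a whole number of unit intervals
        # to the clock edge closest to this data edge.
        n_ui = round((t - clock_edge) / nominal_ui)
        expected = clock_edge + n_ui * nominal_ui
        error = t - expected
        residuals.append(error)
        # Nudge the clock phase toward the observed edge ("tap along").
        clock_edge = expected + gain * error
    return residuals


# Hypothetical signal: one edge per unit interval (UI = 1.0) carrying a
# slow wander of 0.2 UI amplitude with a period of 2000 UI.
edges = [k + 0.2 * math.sin(2 * math.pi * k / 2000) for k in range(4000)]
residual = pll_residual_tie(edges, nominal_ui=1.0)
print(f"raw wander: 0.200 UI, residual peak: "
      f"{max(abs(r) for r in residual):.4f} UI")
```

The gain plays the role of loop bandwidth: a larger value follows faster wander but also tracks out more of the jitter you may want to measure.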

We'll look at some of the advances in jitter analysis that stemmed from the concept of PLL-based clock-data recovery in subsequent posts.
